In a recent interview with Rick Beato (who has also put two AI music generators to the test), Dream Theater keyboard wizard Jordan Rudess demonstrated something remarkable: an AI system that plays music in his style and responds to him in real time. The project, a collaboration with the MIT Media Lab, points to exciting new possibilities for musicians and technology.

From Classical Prodigy to Tech Innovator

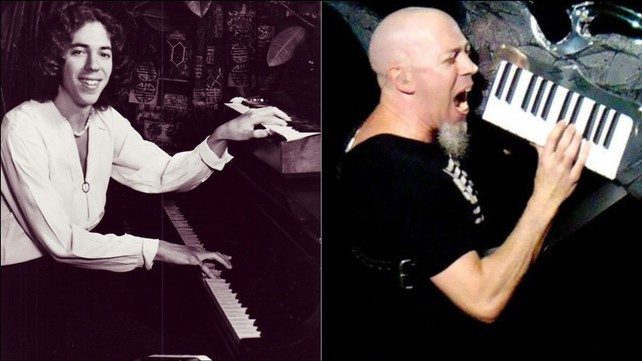

Image credit: Ultimate Guitar

“I was a classical piano prodigy,” explains Rudess. “I went to Juilliard when I was nine.” His strict classical education discouraged exploring rock music, but everything changed when he was about 17. Friends introduced him to bands like Genesis and Emerson, Lake & Palmer, and a new world opened up.

The breaking point came during a lesson with a teacher he describes as “annoying.” After being scolded for not memorizing a lengthy Frédéric Chopin piece in just one week, Rudess recalls: “I thought about my Minimoog and all these things I really wanted to do, and I said, ‘I got to get out of here.’”

Compared with his Dream Theater bandmates, Rudess says his career “started very late.” He supported himself playing show tunes while figuring out his path, and even worked for synthesizer companies such as Korg and Kurzweil, experience that deepened his understanding of music technology.

Teaching a Computer to Play Like Jordan Rudess

The highlight of the interview is Rudess demonstrating his AI music project with MIT. The concept is brilliantly simple: an AI trained on Rudess’s playing style that can have a real-time musical conversation with him.

“I was like, ‘How about if I teach it to play like a Jordan kind of style?’” he explains. The training process involved recording himself improvising for 30 minutes in specific styles. These recordings were then used to train the AI model.

What makes this project special is its interactive nature. During the demonstration, Rudess and his AI partner trade musical phrases, with the AI responding to Rudess’s playing with complementary ideas that maintain his style while introducing variations.

“What’s interesting is for me to play something and the model responds, ‘Oh, he did that. Now I’ll go from there and maybe introduce a new idea,’” Rudess explains. “And then I have to be on my toes to respond.”

The MIT Media Lab project describes this as “Symbiotic Virtuosity”: humans and computers making music together and learning from each performance.

GeoShred: Making Music More Accessible

Before showing his AI project, Rudess demonstrates GeoShred, an app he developed with Dr. Julius Smith at Stanford. This touchscreen interface combines the precision of keyboard playing with the expressive bending of guitar playing.

What makes GeoShred special is its intelligent touch control. When you touch a note, it’s perfectly in tune, but when you move your finger, you can bend between notes. The “secret sauce” is that when your finger stops moving, it automatically snaps to perfect pitch.
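The interview doesn't explain how GeoShred implements this behavior, so what follows is only an illustrative Python sketch under our own assumptions, not GeoShred's code. It treats pitch as a fractional MIDI note number (60.0 is middle C, 60.5 is a quarter tone above it), and the names `next_pitch` and `nearest_semitone`, the three touch events, and the `glide` factor are all hypothetical. A touch lands exactly on a note, sliding bends freely, and resting eases the sound back to the nearest semitone.

```python
import math


def nearest_semitone(pitch: float) -> float:
    # Round to the nearest whole MIDI note number; exact ties round up.
    return float(math.floor(pitch + 0.5))


def next_pitch(sounding: float, finger: float, event: str, glide: float = 0.25) -> float:
    """Advance the sounding pitch by one control frame (hypothetical model).

    sounding: pitch currently being played
    finger:   pitch under the finger's current position
    event:    "touch", "move" or "rest" (assumed event names)
    glide:    fraction of the remaining distance covered per frame while resting
    """
    if event == "touch":
        # A fresh touch lands exactly in tune.
        return nearest_semitone(finger)
    if event == "move":
        # While the finger slides, the sound follows it continuously (a bend).
        return finger
    if event == "rest":
        # Once the finger stops, ease toward the nearest in-tune note.
        target = nearest_semitone(finger)
        return sounding + glide * (target - sounding)
    raise ValueError(f"unknown event: {event!r}")


# Usage: touch near middle C, bend up past a quarter tone, then hold still.
p = next_pitch(0.0, 60.1, "touch")  # lands on 60.0
p = next_pitch(p, 60.6, "move")     # bends to 60.6
for _ in range(20):
    p = next_pitch(p, 60.6, "rest")  # settles toward 61.0
```

Easing toward the target over several frames, rather than jumping instantly, is one assumed way to make the "snap" sound smooth instead of abrupt.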

“When I’m playing it, I totally feel that kind of wild energy like I’m just controlling this beast,” Rudess says.

The app has found unexpected popularity in India, where musicians appreciate its ability to play microtones needed in Indian music. GeoShred also makes music more accessible by letting users limit notes to specific scales, making it impossible to play “wrong” notes.
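Limiting notes to a scale can be pictured as a simple mapping from any played note to the closest note that belongs to the chosen scale. The following Python sketch is a hypothetical illustration, not how GeoShred actually does it: the function `lock_to_scale`, the `MAJOR` offsets, the default root of middle C (MIDI note 60), and the rule that ties resolve downward are all our assumptions.

```python
# Semitone offsets of the major scale above its root (assumed example scale).
MAJOR = (0, 2, 4, 5, 7, 9, 11)


def lock_to_scale(note: int, root: int = 60, scale: tuple = MAJOR) -> int:
    """Map any MIDI note to the nearest note of the chosen scale.

    Hypothetical helper: when two scale notes are equally close,
    the lower one wins.
    """
    # Start of the octave (relative to the root) that contains the note.
    octave_base = root + 12 * ((note - root) // 12)
    # Gather scale notes from the neighboring octaves too, so notes near
    # an octave boundary can still snap across it.
    candidates = [
        base + step
        for base in (octave_base - 12, octave_base, octave_base + 12)
        for step in scale
    ]
    # Closest candidate first; on a tie, the lower note.
    return min(candidates, key=lambda n: (abs(n - note), n))


# Usage in C major: C#4 (61) falls back to C4 (60), E4 (64) is already allowed.
print(lock_to_scale(61), lock_to_scale(64))
```

With every incoming note passed through a mapping like this, there is simply no out-of-scale pitch left to play, which is the sense in which "wrong" notes become impossible.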

Learning Guitar at 64 & The Human Element

Rudess recently began learning guitar at age 64, playing “almost every day” since. “People ask me this all the time. I’m like, ‘Yes, you can learn to play incredibly well,’” he encourages. This led to a collaboration with Strandberg on a Jordan Rudess signature model guitar with visual fretboard guides and a sustaining pickup that appeals to his keyboard sensibilities.

Despite his enthusiasm for AI, Rudess maintains a balanced view on its role in music. When asked if AI will eventually play as well as him, he offers a thoughtful response: “If you’re going to see Dream Theater, you’re coming to see human beings because there is a story there. They’ve been following John Petrucci since they were kids… people actually love when a musician isn’t perfect.”

This human element, the personal story and the beautiful imperfections, is what Rudess believes will always distinguish human musicians from AI. “The AI doesn’t have a story like a person does,” he notes.

Rudess’s approach offers a middle ground in the often polarized discussions about AI and music. Rather than replacing musicians, his AI serves as a collaborative tool that responds to and enhances human creativity: a musical conversation partner that challenges and inspires.

Through both his classical rebellion and AI innovation, Rudess demonstrates that technology works best when it amplifies the human qualities that make music meaningful: creativity, imperfection, and personal connection.